The Use of AI Bots to Discredit Ukraine

The Growing Bot and Disinformation Landscape

The spread of AI-generated disinformation is increasing rapidly. According to a recent Axios story, foreign adversaries like Russia and China are already using generative AI to create fake identities, produce content that seems real, and flood social media platforms on an unprecedented scale. Experts warn that this technology allows adversaries to both develop and deliver content at a speed and scale we’ve never seen before. Ukraine has become a primary target of this activity, with bot networks repeating Kremlin messaging and using AI tools to spread it across languages and platforms.

Executive Summary

At PeakMetrics, we analyzed a sample of 5,780 social media posts aimed at discrediting Ukraine over the past month. Of these, 28.2% were rated “Likely” or higher for bot activity. The activity reused familiar Kremlin messages (corruption, war crimes, and challenges to Zelensky’s legitimacy) while employing AI-driven amplification tactics. The purpose is clear: weaken confidence in Ukraine’s government, reduce Western support, and frame Ukraine as a broken partner. Based on the alignment with recognized Kremlin narratives and behaviors, PeakMetrics identified this activity as sponsored by the Russian state.

Why It Matters

Generative AI has made influence operations nearly cost-free. It doesn’t need perfect arguments—just high volumes of content that blend into everyday social media noise. This is what makes it effective: sheer scale, speed, and saturation. These campaigns aim to make fringe ideas, such as conspiracy theories or extreme political views, seem mainstream. Coordinated bot activity helps these narratives enter wider discussions faster than ever before.

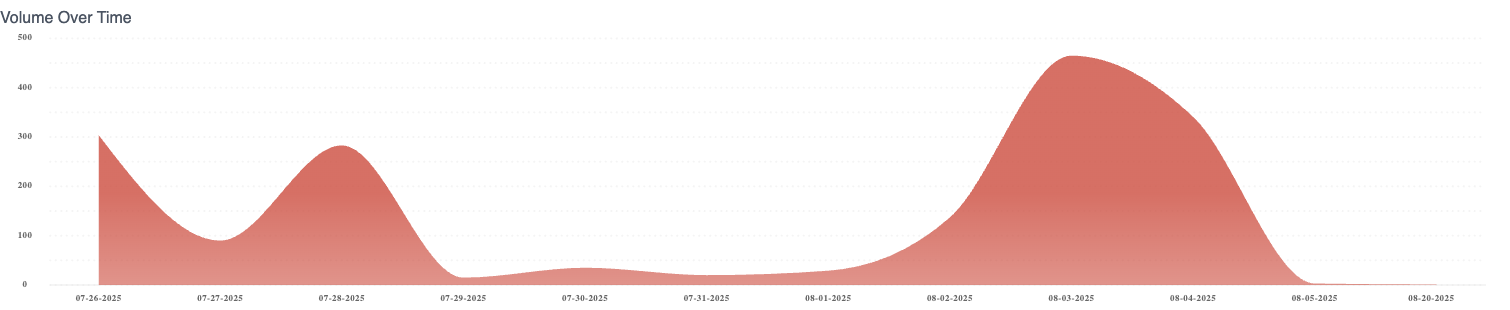

Findings from the Data

Most bot activity took place on X (Twitter), followed by Reddit, with Instagram contributing a smaller share.

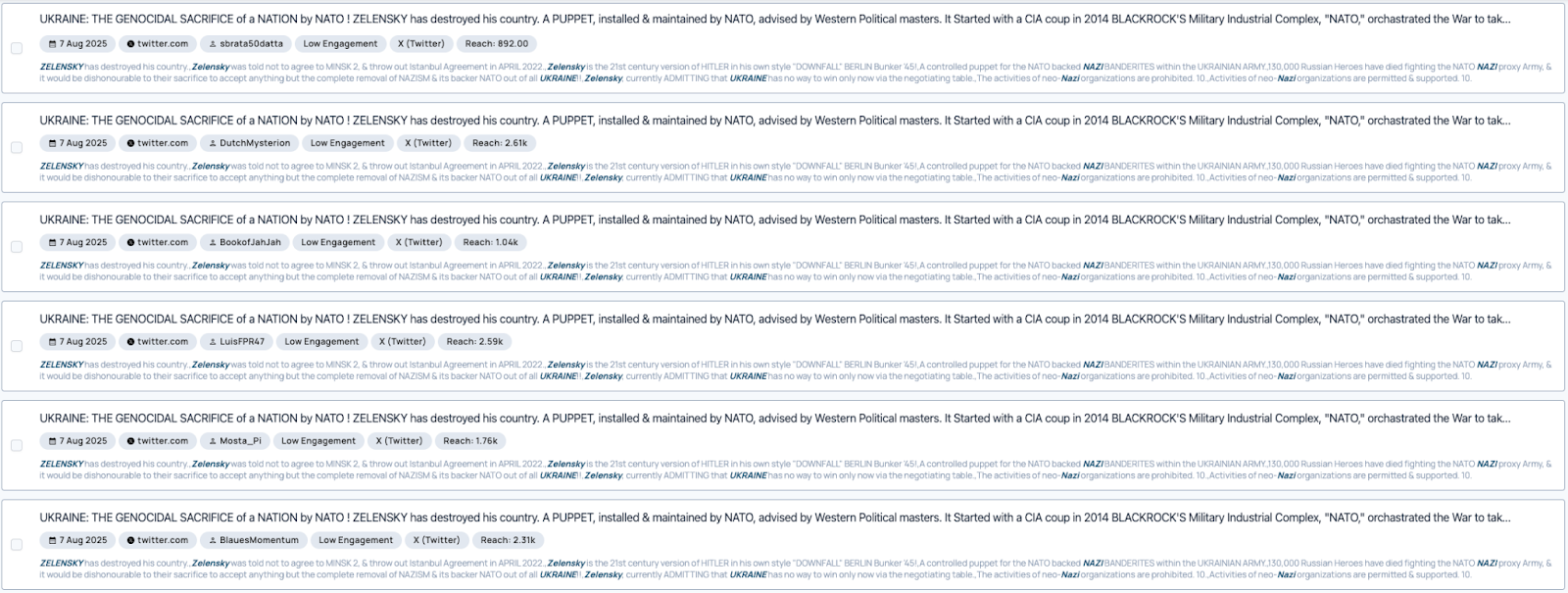

The narratives themselves consistently reflected Kremlin propaganda themes:

- Corruption and money laundering allegations (≈28% of the conversation)

- Nazi and denazification tropes (≈30% of the conversation)

- NATO “sacrificing” Ukraine (≈14% of the conversation)

- Refugee- or migrant-related crime allegations (≈17% of the conversation)

- War crimes framing (≈11% of the conversation)

- Zelensky’s illegitimacy (smaller overall, but concentrated in a burst of 123 posts on Aug. 24 that repeated the same sentence within a single day)

Language analysis showed intentional targeting. English dominated at around 74%, while French accounted for 23%, pointing to a focused cross-language effort. Duplication patterns revealed coordination: 38% of bot posts were exact duplicates, and another 41% were reposts. Bot ratings confirmed the presence of automation, with 6.8% rated “Almost Certain,” 10.5% “Very Likely,” and 10.9% “Likely,” which together make up the 28.2% rated “Likely” or higher.
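The arithmetic behind these figures can be checked with a short Python sketch. It sums the three rating tiers, converts the total into an approximate post count, and shows one possible way to measure an exact-duplicate share. The helper names (`likely_or_higher`, `normalize`, `exact_duplicate_share`) and the definition of "duplicate" used here (identical text after lowercasing and whitespace cleanup) are assumptions for illustration, not a description of the PeakMetrics methodology.

```python
from collections import Counter

# Figures taken from the text: sample size and bot-rating shares (percent).
SAMPLE_SIZE = 5780
rating_shares = {"Almost Certain": 6.8, "Very Likely": 10.5, "Likely": 10.9}


def likely_or_higher(shares):
    """Sum the rating tiers, rounded to one decimal place."""
    return round(sum(shares.values()), 1)


def normalize(text):
    """Lowercase and collapse whitespace so trivially different copies match."""
    return " ".join(text.lower().split())


def exact_duplicate_share(posts):
    """Fraction of posts whose normalized text appears more than once.

    Assumed definition: every member of a repeated group counts as a duplicate.
    """
    if not posts:
        return 0.0
    counts = Counter(normalize(p) for p in posts)
    duplicated = sum(n for n in counts.values() if n > 1)
    return duplicated / len(posts)


total_share = likely_or_higher(rating_shares)
# Derived estimate, not a figure stated in the report.
approx_bot_posts = round(SAMPLE_SIZE * total_share / 100)
print(total_share, approx_bot_posts)
```

Under these assumptions the three tiers sum to the 28.2% cited in the summary, which corresponds to roughly 1,630 of the 5,780 sampled posts.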

The Role of Generative AI

Generative AI enhances these campaigns in four key ways. First, it enables large-scale and fast operations, allowing networks to generate posts in bulk and automate engagement. Second, it creates “backstopping,” using AI-generated profile photos and bios to give fake accounts a sense of legitimacy. Third, it significantly cuts the costs of multilingual campaigns, as seen in the English–French narrative replication in our dataset. Finally, it supports a flood of content, enabling operators to mass-produce not just text but also images, videos, and websites that overwhelm social media feeds.

Importantly, generative AI doesn’t need to be flawless to be effective. Its strength lies in reducing cost and effort, allowing bot networks to inundate discussions with localized variations. The most effective countermeasures focus less on whether a specific post is AI-generated and more on patterns like duplication, timing, network connections, and narrative clustering—all of which were evident in this dataset.
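As a rough illustration of pattern-based detection, the Python sketch below combines two of those signals, duplication and timing, by flagging any sentence that is repeated many times within a single calendar day. The record fields (`timestamp`, `text`, `account`), the `find_bursts` function, the `min_copies` threshold, and the sample data are all hypothetical; this is a minimal sketch of the idea, not the detection method used in this analysis.

```python
from collections import defaultdict
from datetime import datetime


def normalize(text):
    """Lowercase and collapse whitespace so near-identical copies match."""
    return " ".join(text.lower().split())


def find_bursts(posts, min_copies=3):
    """Return (day, text) groups that contain at least min_copies posts."""
    counts = defaultdict(int)
    accounts = defaultdict(set)
    for post in posts:
        day = datetime.fromisoformat(post["timestamp"]).date().isoformat()
        key = (day, normalize(post["text"]))
        counts[key] += 1
        accounts[key].add(post["account"])
    return [
        {"day": day, "text": text, "copies": n, "accounts": len(accounts[(day, text)])}
        for (day, text), n in counts.items()
        if n >= min_copies
    ]


# Hypothetical records, invented for illustration only.
sample_posts = [
    {"timestamp": "2000-01-01T09:00:00", "text": "Same claim here", "account": "a"},
    {"timestamp": "2000-01-01T09:05:00", "text": "same  claim here", "account": "b"},
    {"timestamp": "2000-01-01T21:30:00", "text": "SAME CLAIM HERE", "account": "a"},
    {"timestamp": "2000-01-01T10:00:00", "text": "Unrelated post", "account": "c"},
    {"timestamp": "2000-01-02T08:00:00", "text": "Same claim here", "account": "d"},
]

bursts = find_bursts(sample_posts)
print(bursts)
```

A real pipeline would likely add fuzzy matching for lightly reworded copies and narrower time windows, but even this simple grouping would surface a same-day, same-sentence burst like the one described in the findings.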

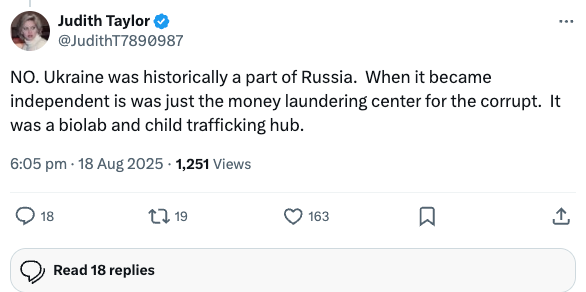

Narrative Spotlight: Ukraine's Battle Against Corruption and War Crimes Amidst Shifting International Alliances

A leading bot-driven narrative presented Ukraine as highly corrupt and accused it of war crimes while questioning its ties with NATO and the West. This approach seeks to divert attention from Russian aggression and instead suggests that Ukraine is morally compromised and geopolitically unstable. The example post folds claims about Ukraine’s corruption into other recurring conspiracies, mentioning biolabs (a hallmark of COVID and bioweapons conspiracies) and child trafficking (related to Epstein and QAnon conspiracies). This is a common seeding tactic for spreading false and misleading narratives.

Conclusion

The disinformation battlefield is no longer confined to trolls and paid bot farms. With generative AI, adversaries can launch influence campaigns at new speeds and scales. Our analysis of bot activity targeting Ukraine shows that these tactics are not just theoretical—they are actively shaping global discussions today.

Countering these campaigns requires looking beyond text analysis to track coordination patterns, duplication, and narrative amplification. By uncovering these behavioral signals, governments, platforms, and organizations can push back against manipulation before it becomes ingrained in public discourse.
